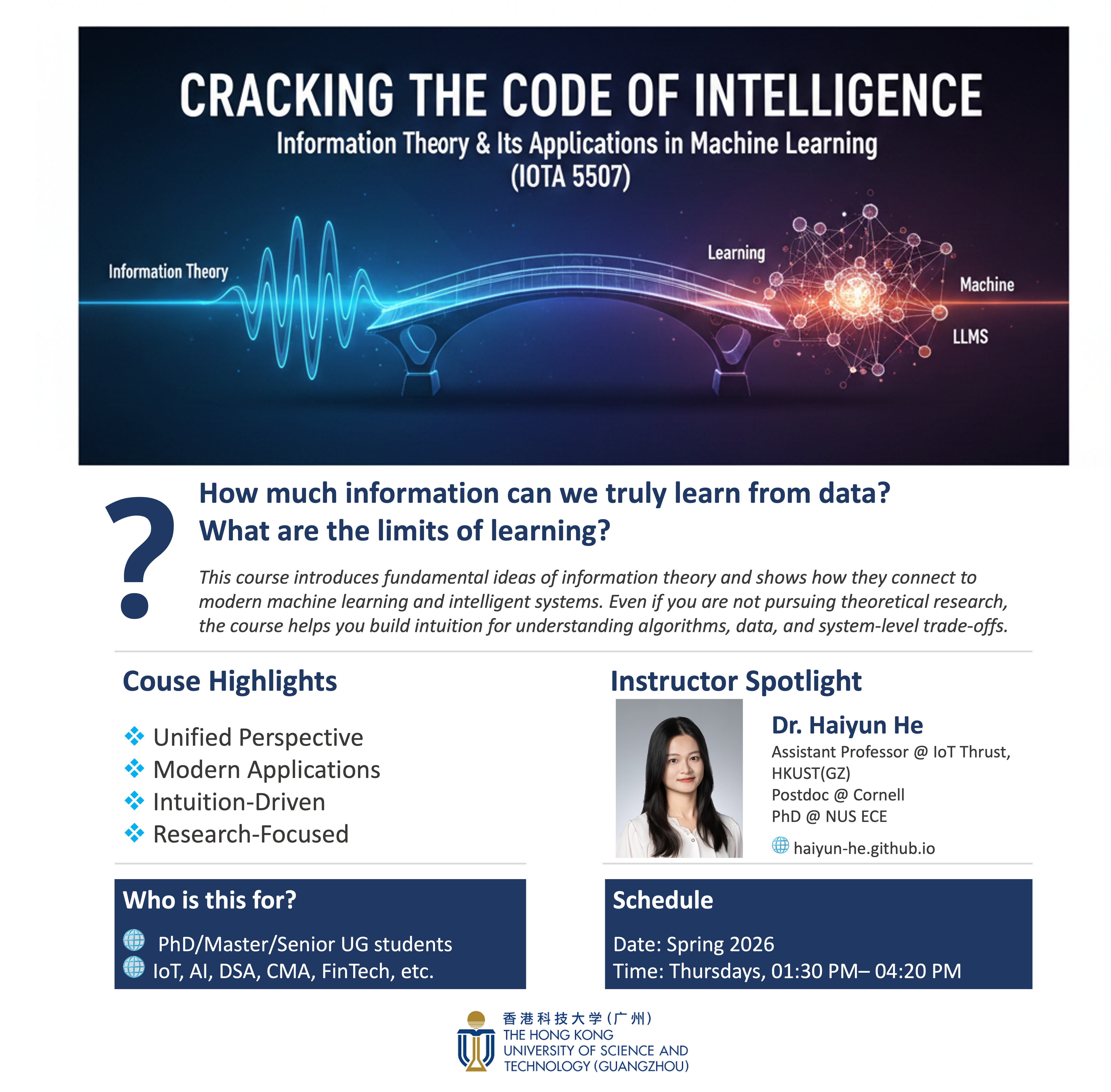

Spring 2026 IOTA 5507/6910I Information Theory and Its Applications in Machine Learning

Course Website: https://hkust-gz.instructure.com/courses/3217

- Lecture time: 1:30-4:20 pm every Thursday

- Classroom: W1-222

- Office hours: TBD

Course Description

How much information can be compressed, transmitted, extracted, and learned from data, and what are the fundamental limits of these processes? This course explores these questions by introducing the fundamentals of information theory and its modern applications in machine learning and statistics. We begin with the classical foundations (entropy, divergences, compression, coding, and data-processing inequalities) and extend them to modern topics in inference, hypothesis testing, and learning. Applications such as generalization bounds and statistical estimation illustrate how information theory characterizes performance limits and guides system design. By the end of the course, students will have gained both the tools and the intuition to apply these methods to contemporary problems in data and learning. They will also develop a unified perspective that connects theory with practice across diverse fields such as AI, data science, and computational modeling.

Prerequisites

Students are expected to be familiar with linear algebra, to have basic knowledge of probability theory, and to have some experience with Python or MATLAB.

Outline (Tentative)

- Week 1: Introduction to information theory; review of the required basic probability

- Week 2: Information measures: entropy, divergence, mutual information

- Week 3: Sufficient statistics, data-processing, convex duality, and variational characterizations

- Week 4: f-divergences

- Week 5: Asymptotic equipartition property (AEP)

- Week 6: Lossless data compression: fundamental limits and coding schemes

- Week 7: Channel coding: basic results and channel capacity

- Week 8: Binary hypothesis testing, Neyman-Pearson lemma

- Week 9: Method of types, hypothesis testing asymptotics

- Week 10: Information bottleneck; lower bounds in statistical estimation and learning

- Week 11: Lower bounds in statistical estimation and learning

- Week 12: Generalization bounds

- Week 13: Contemporary topics in machine learning and LLMs

Course Assessment

- Homework (30%)

- Mid-Term Exam (30%)

- Final Exam (40%)

Textbooks and readings

Students are not required to purchase a textbook; all the material you need will be posted as lecture notes. However, we highly recommend the following references, and the lectures will draw on ideas from both:

- [1] Thomas M. Cover & Joy A. Thomas, Elements of Information Theory, 2nd ed., Wiley, 2006.

- [2] Yury Polyanskiy & Yihong Wu, Information Theory: From Coding to Learning, Cambridge University Press, 2025.